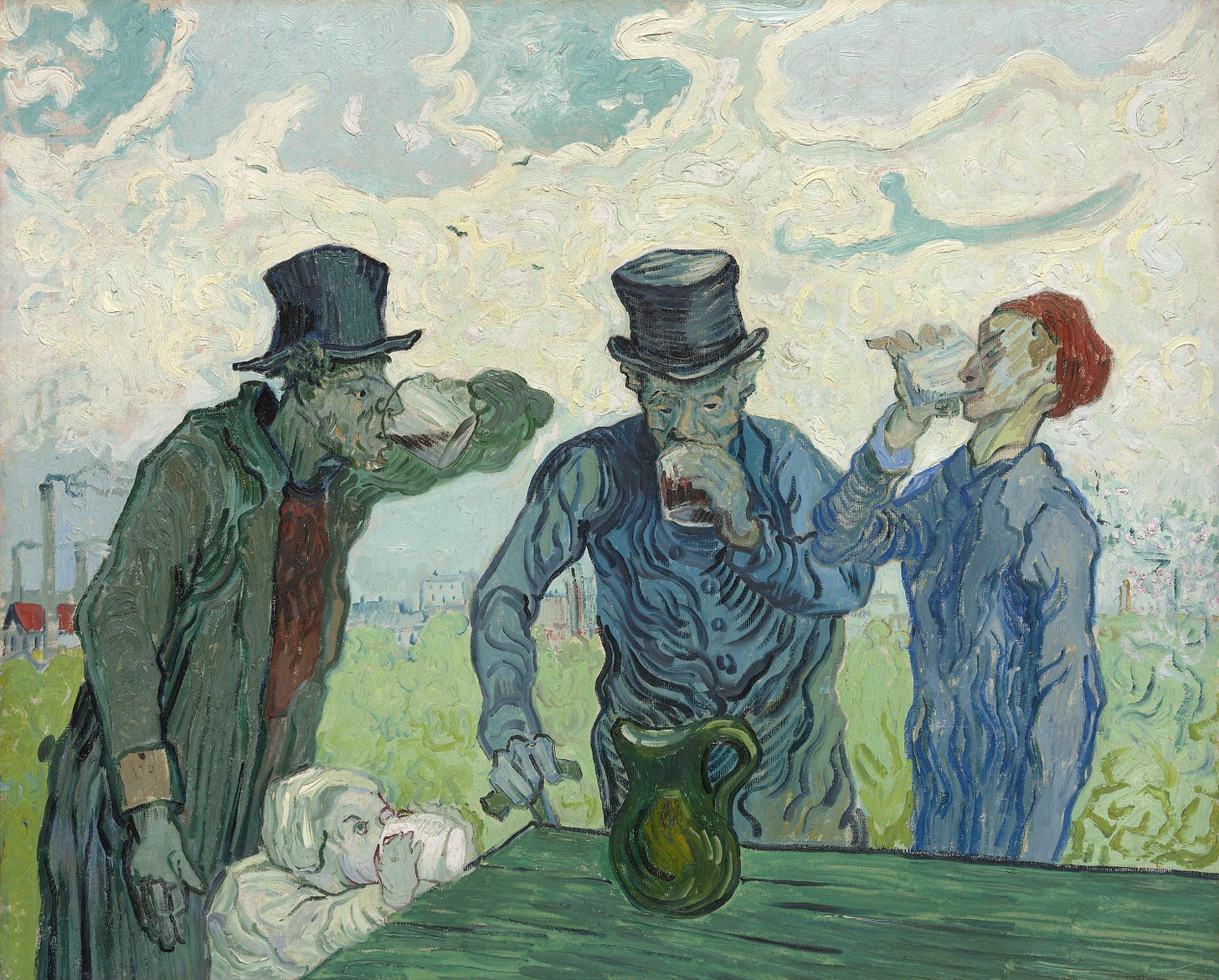

Americans used to drink like fish.

For an impressive example, look no further than the Father of Our Country. On September 14, 1787, near the close of the Constitutional Convention in Philadelphia, George Washington dined and drank at City Tavern as the guest of the “First Troop,” a cavalry corps that had crossed the Delaware with Washington and endured Valley Forge. And here, for the guest list of 55 gentlemen, is what they drank: 54 bottles of Madeira wine, 60 bottles of claret, 22 bottles of porter, 12 bottles of beer, 8 bottles of cider, and 7 large bowls of punch. No other details about the evening are available, but it seems safe to assume that a good time was had by all.

By the standards of the day, the evening doesn’t seem to have been especially out of the ordinary. According to W. J. Rorabaugh’s The Alcoholic Republic, in the early 19th century Americans older than 14 averaged 7 gallons of 200-proof alcohol a year, well more than double the current U.S. average. In the 1820s, roughly half of all adult men were drinking at least six shots of liquor a day. Drinking water was widely considered unsafe, so alcohol was served at every meal; liquor was cheap; and taverns served as centers of local social activity. Visitors from abroad regularly commented on Americans’ hard-drinking ways.

But by the middle of the 19th century, the situation was completely different. According to Rorabaugh, alcohol consumption had plummeted to under 2 gallons annually – below current usage rates. What could have happened to provoke such a dramatic change in the space of a couple of decades?

What happened was the American temperance movement, part of a much larger wave of moralizing and reform unleashed by the religious revival of the Second Great Awakening. America had known religious enthusiasm since its earliest settlers, but by the early 19th century religiosity in the country was at a low ebb. The influence of the Enlightenment among the well-educated could be seen in the large number of deists among the Founding Fathers; meanwhile, as the country expanded westward, its frontier was wild and largely unchurched. In an 1822 letter to a friend, Thomas Jefferson predicted, “I trust there is not a young man now living in the United States who will not die a Unitarian.”

But even as Jefferson was writing those words, a spiritual revolution was in the offing. As I described it in The Age of Abundance:

During the early decades of the nineteenth century, a surge in evangelical fervor that came to be known as the Second Great Awakening swept over the young nation. In New England, Nathaniel Taylor and Lyman Beecher (Harriet’s father) spearheaded the development of “New School” Presbyterian theology. Charles Grandison Finney honed soul winning into a science with his massive revival campaigns in New York and the Midwest; Finney’s ministries were credited with achieving hundreds of thousands of conversions. Camp meetings in the South and the Appalachians sparked explosive growth of the Baptist and Methodist denominations. Between 1780 and 1820, Americans built 10,000 new churches; over the next four decades, they added 40,000 more.

The revitalized American Protestantism that emerged from the Second Great Awakening broke decisively with the Calvinist past. Specifically, it rejected Calvinism’s central dogma of predestination and asserted the individual’s free moral agency…. In its more optimistic strains, the new American religion went beyond mere free will to claim the possibility of human perfectibility…. “If the church will do all her duty,” Finney proclaimed in 1835, “the millennium may come in this country in three years.”

This massive mobilization of spiritual energy, fired by a vision that exalted bourgeois respectability as Christian virtue, inspired a series of moral crusades. Most noteworthy was the rise of the abolitionist movement, as anti-slavery sentiment shifted from support for gradual resettlement in Africa to campaigning for the immediate and unconditional freeing of slaves. William Lloyd Garrison started publishing The Liberator in 1831, and helped to found the American Anti-Slavery Society two years later. Within a mere five years, the Society had over a thousand local chapters and 250,000 members.

Other campaigns for moral uplift proliferated — against gambling, bear-baiting, and cockfighting; against public whipping and mutilation for crimes; against premarital sex (in New England, it’s estimated that one bride in three was pregnant in the late 1700s; by 1840 it was one bride in five or six); and last but not least, against the scourge of alcohol. The American Temperance Society was founded in 1826, and within a dozen years it boasted some 8,000 local groups and over a million members.

The key innovation of the new temperance movement was to see alcohol as inherently dangerous. Drunkenness had always been considered sinful, as had gluttony, but alcohol itself had been seen as no more blameworthy than food; the fault lay entirely with those who overindulged. “Drink itself is a good creature of God, and to be received with thankfulness,” declared the Puritan clergyman Increase Mather back in 1673, “but the abuse of drink is from Satan.”

Lyman Beecher, one of the co-founders of the American Temperance Society, delivered a series of sermons on “intemperance” in 1826, which were then published and went on to enjoy brisk sales for decades. He disputed the common opinion that someone who drinks regularly with no signs of intoxication is doing nothing wrong: “Whoever, to sustain the body, or invigorate the mind, or cheer the heart, applies habitually the stimulus of ardent spirits, does violence to the laws of his nature, puts the whole system into disorder, and is intemperate long before the intellect falters, or a muscle is unstrung.”

“The effect of ardent spirits on the brain, and the members of the body, is among the last effects of intemperance, and the least destructive part of the sin,” Beecher maintained. “It is the moral ruin which it works in the soul, that gives it the denomination of giant-wickedness.”

At the heart of alcohol’s evil, according to Beecher, is its effect on the will – that is, its addictiveness. An ardent abolitionist (recall he was the father of the author of Uncle Tom’s Cabin), he argued that submission to alcohol was another form of slavery:

The demand for artificial stimulus to supply the deficiencies of healthful aliment, is like the rage of thirst, and the ravenous demand of famine. It is famine: for the artificial excitement has become as essential now to strength and cheerfulness, as simple nutrition once was. But nature, taught by habit to require what once she did not need, demands gratification now with a decision inexorable as death, and to most men as irresistible.

We execrate the cruelties of the slave trade — the husband torn from the bosom of his wife—the son from his father — brothers and sisters separated forever — whole families in a moment ruined! But are there no similar enormities to be witnessed in the United States?

Every year thousands of families are robbed of fathers, brothers, husbands, friends. Every year widows and orphans are multiplied, and grey hairs are brought with sorrow to the grave — no disease makes such inroads upon families, blasts so many hopes, destroys so many lives, and causes so many mourners to go about the streets, because man goeth to his long home.

The Jacksonian-era temperance movement radicalized over time, calling for total abstinence rather than mere moderation and seeking legal prohibition to ban alcohol production and sales. But its greatest achievements came from its incrementalist moral suasion. Beecher started with his own profession, speaking out against drinking by the clergy. He moved on to campaign against employers serving liquor on the job: “It is not too much to be hoped, that the entire business of the nation by land and sea, shall yet move on without the aid of ardent spirits, and by the impulse alone of temperate freemen.” We can see in such tactics an analog to the “clear and hold” approach to counterinsurgency: pacifying the threat in some particular location, creating conditions that ensure the threat will not return, and using the momentum from such partial victories to carry the fight to other domains.

(I’ll note that the temperance movement petered out with the outbreak of the Civil War, but was reconstituted in the 1870s. This later temperance movement culminated in Prohibition — oops, a bridge too far. So I’m focusing here on the more modest, and more effective, earlier movement.)

The Second Great Awakening more generally, and the antebellum temperance movement in particular, hold important lessons for us today. First, they show us that — contrary to a pessimistic strain of opinion common on the right — a society’s “moral capital” is not just some inheritance from the pre-modern past that is inevitably drawn down as the old traditions fade. Broad-based moral regeneration can occur under the conditions of modernity, and it can be rapid and dramatic. More specifically, the temperance movement shows us how a free society can respond to the challenges of addictive activities that subvert individual autonomy. In a free society, we generally presume that people should be allowed to do what they want. But informed by the distinction between liberty and license, we recognize that sometimes we face a conflict between our “first order” and “second order” wants: we may simultaneously desperately want a drink and desperately want to be free of that desire. The temperance movement shows that education combined with moral suasion — raising awareness of the threat posed by some addictive activity, and liberally wielding praise and blame to incentivize right conduct — can be effective in keeping self-defeating abuses of freedom in check.

Today we are faced with a number of deepening social ills as a result of another species of intemperance: overindulgence in the consumption of mass media. According to Nielsen data, American adults averaged a little more than 11 hours a day consuming media in the first quarter of 2018: 4 hours, 46 minutes watching TV, 3 hours, 9 minutes using a smartphone or tablet, 1 hour, 46 minutes listening to radio (I think we can exempt that from our concern), 39 minutes using the internet on a computer, and 39 minutes playing games. As for young people, the New York Times reports that, as of 2019 (i.e., prior to the covid-related school closings that drove numbers even higher), the average amount of time staring at screens stood at 4 hours, 44 minutes a day for tweens (ages 8 to 12) and 7 hours, 22 minutes for teens (ages 13 to 18). It’s no wonder that the word “binge” now more commonly refers to TV viewing than to going on a bender.

These aggregate figures are eye-popping, and on their own reveal that all is far from well. Even if all the content consumed were wholesome and edifying, the sheer bulk of the time expended suggests serious problems of opportunity cost. And of course, we know that a great deal of media content is mental junk food at best and at worst can badly distort our sense of reality — whether through inundating us with images of airbrushed physical perfection that accentuate our own inadequacy, or by spreading conspiracy theories and other arrant nonsense. Accordingly, it is now widely accepted that current media habits are unhealthy and that screen time is associated with all kinds of negative side-effects — including obesity, loneliness, anxiety, and depression.

Let me make clear that I am far from puritanical about such matters. Over the course of my lifetime I’ve watched great gobs of TV, much of it eminently forgettable dreck, and these days I spend the bulk of my waking hours staring at a screen — mostly for work, but with lots of time-killing frolics and detours along the way. And yet it hasn’t stopped me from reading widely and deeply, traveling all over the world, and enjoying deep and abiding personal relationships. Indeed, shared media experiences with friends and loved ones have served to strengthen our bonds and now make for some of our fondest memories.

So I want to home in on four particular aspects of contemporary media consumption that I believe are especially problematic and in need of a concerted remedial response: (1) social media; (2) solitary consumption; (3) crowding out of deep literacy; and (4) media coverage of politics.

I won’t say much about social media, as so much has already been written and the issues are already so familiar. Social media sites promise to bring us together and strengthen our connections to friends and family, and they do sometimes deliver on that promise. It’s typical for those of us who were adults when Facebook hit the scene to have a longish list of folks from our past with whom we’ve reconnected; and for parents with kids, keeping grandparents, aunts and uncles, and family friends in the loop for all their various milestones is now almost effortless. Yet it has become painfully clear that social media has a deeply troubling dark side, especially for younger people. As I’ve written about previously, the past decade or so has seen a spike in mental health problems among adolescents, and the fact that this has occurred just as social media usage was taking off does not appear to be a coincidence. At the heart of the problem seems to be social media’s encouragement of “upward social comparison” — judging ourselves on the basis of a flood of highly selective and manipulated images and text designed to show people in the most flattering possible light.

This and other problems caused by social media aren’t just unfortunate side effects: the leading social media sites are designed to be unhealthy. They market themselves as helping people to connect, but their overriding priority is to keep us connected to the site no matter what. “Like” buttons, in particular, drive engagement through the dopamine hits we get when people respond positively to our posts. But the existence of those buttons turns social media into a ruthlessly precise, nonstop, global popularity contest, in which the vast bulk of us are destined to come out on the short end of the stick.

In much of life, what we’re doing matters less than whom we’re doing it with, and that’s true of consuming media as well. TV programming can be as shallow and mindless as you like, but watching and laughing and commenting with other people can make for an enjoyable and enriching social experience. Video games may be a huge time suck, but whiling away those hours with buddies isn’t time wasted in my book. The problem is that TV and the internet lend themselves so easily to solitary enjoyment, since they provide a kind of ersatz companionship. Think of old people living alone with the television constantly on in the background to chase away the silence; think of teenage girls sitting in their bedrooms, scrolling through Instagram for hours. Media usage makes it easier for people to withdraw from the world and avoid the real human contact they so badly need.

My Niskanen colleague Matt Yglesias wrote a good Substack post on this point titled “Sitting at home alone has become a lot less boring, and that might be bad.” Here’s a sample:

I’m obviously not going to give up the convenience of streaming home video, and neither are you.

But it is true that if my home viewing options were worse, I’d probably be inclined to ping friends a bit more frequently to see if they want to go see a movie. And those friends would probably be a bit more inclined to say yes to such invitations. They’d also probably be a bit more inclined to ping me about going to the movies….

And I’m inclined to say we’d probably all be better off for it.

To sit home, alone, and stream (which, to be clear, I do a lot!) is fun and easy and convenient. But it strikes me as potentially fun and easy and convenient in the same sense that it’s easy and convenient to not exercise or fun and easy to gorge yourself on Pringles. We’re weak creatures and can be easily tempted into patterns of behavior that we would reject if we had the ability to program our short-term behavior to align with our long-term goals.

It’s not just logistical convenience that links media consumption to self-isolation – it’s psychological, emotional convenience as well. Real, flesh-and-blood people are sometimes moody, sometimes demanding, sometimes boring, sometimes annoying; real people can’t interact with each other over the long term without some friction. By contrast, TV characters never argue with you, porn stars never reject you, you can restart games at the precise spot you messed up, and you can mute yourself and disappear from social media the second anything gets boring or uncomfortable. The more habituated you get to this undemanding, frictionless substitute for genuine social interaction, the more difficult and burdensome the real thing can start to seem. (For more on this, read my earlier essay “Choosing the Experience Machine,” and in particular check out the excellent Freddie deBoer essay I discussed there titled “You are You. We Live Here. This Is Now.”)

Excessive media consumption isn’t just making us fat, lonely, anxious, and depressed; it’s making us stupider as well. I’ve also written about this before, so I won’t belabor the point; instead let me once again point you to my friend Adam Garfinkle’s essay “The Erosion of Deep Literacy,” as well as Neil Postman’s decades-old but still well-aimed jeremiad Amusing Ourselves to Death. The bottom line is that the cognitive culture of print is intellectually much deeper, richer, more complex, and more demanding than the cognitive cultures of either television or the internet. Reading books requires sustained focus and attention, as well as logical thinking to follow and assess elaborate exposition and argument. TV viewing, by contrast, is passive, unfocused, carried along by moving images and emotional cues, while internet scrolling confronts our fractured, overloaded attention with jumbled mishmashes of disconnected information and hot-button stimuli. I believe that we can see our passage to a post-literate culture in the 21st-century phenomenon of the “reverse Flynn effect”: after a sustained rise in raw IQ scores in advanced countries from the 1930s through the 90s (discovered by IQ researcher James Flynn and named after him), more recently the trend is going in the opposite direction. In my view, the original Flynn effect reflected our adaptation to the more complex and cognitively challenging environment of technology- and organization-intensive industrialism; brains trained on TikTok videos and emojis are generally not going to be as capable at maintaining focus and reasoning abstractly.

Finally, our current media environment is flatly inconsistent with the healthy operation of democratic politics. Here again, this is ground I’ve covered already. The fundamental problem is an ineradicable conflict between the profit motive and democracy’s need for a well-informed public. This conflict can be managed well enough when media competition is restrained (as it happened to be, in both newspapers and broadcast, during the first three-quarters of the 20th century); but when, as now, there is intense competition for readers’ and viewers’ attention, media providers will inevitably stoop to conquer. That is, they will be driven to transform the coverage of public affairs into another species of entertainment, offering up hot-button sensationalism, cartoonish contrasts of black and white, and an overriding focus on the competitive drama of who’s up and who’s down instead of on the substance of governance.

More specifically, it remains underappreciated how much the rise of contemporary authoritarian populism here in the United States was the creation of a small group of media entrepreneurs. First talk radio, then Fox News, then the right-wing internet used technological innovation to reach audiences not aligned with the centrist liberalism of the old media establishment; they courted and built that audience by pandering to its prejudices and offering up a steady red-meat diet of outrages and demonization. Decades of such pandering created the contemporary GOP base, totally detached from consensus reality and demanding ever-more lurid political theater. Once Trump came on the scene, mainstream media took the bait and, cashing in on the intense media interest that right-wing demagoguery generated, cast themselves as leaders of the “resistance” and offered counter-theater to thrill their progressive constituents. CBS Chairman Les Moonves said the quiet part out loud when he admitted of Trump’s candidacy, “It may not be good for America, but it’s damn good for CBS.”

Here then are my proposed targets for a 21st century media temperance movement: (1) social media use, especially among young people; (2) solitary media consumption; (3) the decline in reading the printed page; and (4) political infotainment. While these are the specific abuses that the movement would seek to remedy, the broader campaign should be to instill in the public, or at least a critical mass of the educated public, a healthy suspicion of virtual experience in general. Just as the antebellum temperance movement changed attitudes about alcohol, using moral suasion to reveal it as inherently addictive and dangerous, so a modern-day movement needs to raise public awareness of the addictive spell that virtual experience can cast on us — and the cognitively compromised nature of that virtual experience. Every time we switch off the real world to tune in the mediated one, we should feel a little twinge of discomfort, as if we’d stepped into a disreputable dive bar in the middle of the day. We should be on our guard.

With regard to the specific abuses I’ve enumerated, the movement should aim to be ambitious, starting with changing attitudes and moving on to changing actual habits. Age limits for access to social media should be raised and enforced; parents should see teen phone use in the same light as teen drinking. Initiatives should be launched to design non-addictive social media sites that respect user privacy and autonomy. The general sense of guardedness toward the virtual world should be heightened when entering it alone; excessive solitary media consumption should be regarded as worrisome, while family viewing time and neighborhood watch parties should be celebrated and encouraged. Book reading should be promoted for all ages, with sponsorship of book clubs and public book reading events and public service messages featuring media celebrities as class traitors. Online “political hobbyism” should be stigmatized as vulgar and creepy; cable news viewing of whatever ideological stripe should be actively discouraged through organized boycotts.

Could such a thing actually come to pass? The raw materials for a media temperance movement are already lying around at hand: there is widespread awareness of the general problem, and there are already numerous groups actively working on many of the movement goals I mentioned above. Whether these disconnected responses can mobilize into a coherent and energetic movement is anybody’s guess. But this much seems clear: it’s hard to picture a dramatically better society without a dramatically improved media environment.

I live in a small town where people are not quite so socially isolated as they are said to be in some cities. Reading this, I was beginning to feel that it was "someone else's problem" until I started reflecting on the fact that when my wife and I go to our weekly neighborhood game night to play old-fashioned word games, nearly everyone keeps their phones turned on and out on the table. The conversation often consists of comments on what has just arrived on their screens and counter-comments illustrated with material instantly searched up on the same screens. What I find odd about this is that everyone at the table considers this normal. And keep in mind that this is not a young crowd, but rather a bunch of retired boomers.

One idea: a study of how media consumption affects markers of success in life. I understand there's a "chicken and egg" issue, but if parents knew that their kids' consumption of media was statistically correlated with college, income, marriage, etc., that might be powerful.